*Editor’s note: K-VIBE invites experts from various K-culture sectors to share their extraordinary discoveries about Korean culture.

Matthew Lim's AI Innovation Story: The Future of AI Lies in What We Write Today (Part 1)

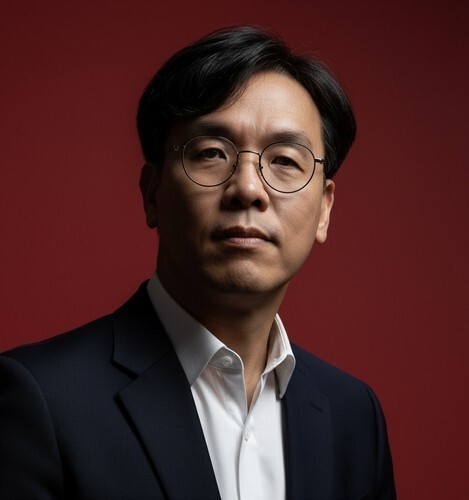

By Matthew Lim, AI expert and director of the Korean Association of AI Management (Former Head of Digital Strategy Research at Shinhan DS)

In recent months, a series of ominous warnings has emerged from the heart of the artificial intelligence industry. In January this year, Elon Musk said, “We have exhausted the cumulative sum of human knowledge for AI training,” adding that “this problem already surfaced last year.” Rather than an exaggeration, the remark reflected a growing sense of crisis that had already been circulating within the industry.

Demis Hassabis, CEO of Google DeepMind and a Nobel laureate, also cautioned that “we should not expect AI to continue advancing at the same rapid pace as in recent years.”

At first glance, the notion that AI is running out of data to learn from seems counterintuitive. Hundreds of millions of posts, photos and videos are uploaded to the internet every day, and the volume of digital information continues to grow at an exponential rate. However, the reality seen by AI researchers and companies is different. The problem is not the sheer quantity of data, but the rapid decline in the amount of high-quality data suitable for training.

▲ This Yonhap file photo shows Google DeepMind CEO Demis Hassabis. (PHOTO NOT FOR SALE) (Yonhap)

◇ Data Depletion in Numbers

The history of large language models is, in many ways, a history of ever-growing data consumption. GPT-3, released in 2020, was trained on about 300 billion tokens (units of text such as words or word fragments). Just three years later, in 2023, GPT-4 was estimated to have been trained on about 12 trillion tokens, roughly a 40-fold increase. Meta’s Llama 3, launched in April 2024, was trained on more than 15 trillion tokens, and some Llama 4 models released the following year reportedly used over 40 trillion tokens, more than doubling again in just one year.
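The multiples in the paragraph above come from simply dividing the reported token counts. A minimal Python sketch using only the approximate figures cited here (the GPT-4 number is an outside estimate, and the Llama 4 number applies to some models only); the dictionary labels and the `growth` helper are illustrative names:

```python
# Approximate training-set sizes in tokens, as cited in this article.
# GPT-4's figure is an outside estimate, not an official disclosure.
TOKENS = {
    "GPT-3 (2020)": 300e9,
    "GPT-4 (2023, est.)": 12e12,
    "Llama 3 (2024)": 15e12,
    "Llama 4 (2025, some models)": 40e12,
}


def growth(before: str, after: str) -> float:
    """Return how many times larger the later training set is than the earlier one."""
    return TOKENS[after] / TOKENS[before]


gpt_jump = growth("GPT-3 (2020)", "GPT-4 (2023, est.)")
llama_jump = growth("Llama 3 (2024)", "Llama 4 (2025, some models)")
print(f"GPT-3 -> GPT-4: {gpt_jump:.1f}x")  # 40.0x
print(f"Llama 3 -> Llama 4: {llama_jump:.1f}x")  # 2.7x
```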

With each new generation of models, data requirements are rising at an exponential pace. Within the GPT series alone, new versions such as GPT-4.1, GPT-4.1 mini, GPT-5, GPT-5.1 and GPT-5.2 have appeared only a few months apart. This rapid release cycle reflects fierce competition, but it also stems from a structural dependence: better performance has required continually feeding models ever larger, or more refined, datasets.

The problem is that the amount of high-quality data newly produced by humans grows by only about 4 percent a year, far more slowly than the data appetite of cutting-edge models, whose training sets have multiplied from one generation to the next. In fact, several research institutions estimate that less than 10 percent of publicly available, human-generated text on the internet is of sufficiently high quality for AI training.
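One way to gauge the size of this gap is to convert growth rates into doubling times with the standard compound-growth formula ln 2 / ln(1 + r). The minimal Python sketch below is a back-of-the-envelope comparison, not a forecast: its only inputs are the roughly 4 percent annual figure and the Llama 3 to Llama 4 token counts cited earlier, and it treats that single year's jump as if it were a steady rate. The `doubling_time` helper is an illustrative name.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years for a quantity growing by `annual_rate` per year (0.04 = 4%) to double."""
    return math.log(2) / math.log(1 + annual_rate)


# High-quality human text: about 4 percent growth per year (figure cited in this article).
human_text = doubling_time(0.04)

# Training data: Llama 3 (15 trillion tokens) to some Llama 4 models (40 trillion) in one year.
training_data = doubling_time(40 / 15 - 1)

print(f"High-quality human text doubles every {human_text:.1f} years")  # 17.7
print(f"Training data at the Llama 3 -> Llama 4 pace doubles every {training_data:.1f} years")  # 0.7
```

At these rates, training sets would double roughly 25 times as fast as the supply of new high-quality text.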

Once texts riddled with spelling and grammatical errors, spam, duplicated or low-quality content, hate speech and simple advertising slogans are filtered out, the amount of usable material is far smaller than it appears. Moreover, when data that cannot be freely used due to copyright and privacy concerns is excluded, the pool of training material legally available for commercial AI models shrinks even further.
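Filtering of this kind is usually automated. The toy Python sketch below is hypothetical and does not represent any company's actual pipeline. It covers only a few of the filters named above (exact duplicates, very short fragments and advertising-style spam) plus a crude check for symbol-heavy noise. The blocklist, the minimum length and the 60 percent letter threshold are assumptions chosen for illustration; production systems often add trained quality classifiers and fuzzy deduplication.

```python
import hashlib
import re

# Hypothetical markers of spam or ad copy; real pipelines use far richer signals.
SPAM_MARKERS = ("buy now", "click here", "limited offer")
MIN_WORDS = 5  # hypothetical minimum length for a usable document


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def keep(text: str) -> bool:
    """Apply simple quality heuristics to one document."""
    norm = normalize(text)
    if len(norm.split()) < MIN_WORDS:
        return False  # too short to carry much information
    if any(marker in norm for marker in SPAM_MARKERS):
        return False  # looks like advertising or spam
    letters = sum(ch.isalpha() for ch in norm)
    return letters / len(norm) > 0.6  # drop symbol- or number-heavy noise


def filter_corpus(docs: list[str]) -> list[str]:
    """Keep documents that pass the heuristics, dropping exact duplicates."""
    seen: set[str] = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen or not keep(doc):
            continue
        seen.add(digest)
        kept.append(doc)
    return kept


corpus = [
    "The committee published its annual report on regional water quality.",
    "the committee published its annual   report on regional water quality.",
    "BUY NOW!!! Click here for a limited offer",
    "ok thanks",
]
print(filter_corpus(corpus))
```

On these four sample strings only the first survives: the second is a case-and-whitespace variant of the first, the third trips the spam list and the fourth is too short.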

This scarcity explains why, in recent years, major AI companies have rushed to sign data licensing agreements with media outlets, publishers and music companies. The trend signals that most publicly accessible web data has already been exploited and that the industry has entered a stage where it must pay for refined, high-quality content.

◇ Synthetic Data as a Solution — and Its Limits

To overcome this bottleneck, the AI industry has turned to “synthetic data” as an alternative. This refers to the practice of using text, images or code generated by AI itself as new training data. The method has long been used in fields where real user data is difficult to collect or is restricted because it contains sensitive information, and it has recently been adopted in earnest for large language model training as well.

Market research firm Gartner estimated that about 60 percent of the data used in AI projects in 2024 would already be synthetic in some form. Big-tech firms are using synthetic data across a wide range of areas, including manufacturing process simulations, autonomous driving, medical diagnostics and financial risk analysis. In language models, too, training on a blend of human-generated and AI-generated data has become increasingly common.
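As a hedged illustration of what blending human-generated and AI-generated data can look like in practice, here is a minimal Python sketch that assembles a training mix at a chosen synthetic share, assuming the synthetic examples have already been generated. The function name `blend`, the origin tags and the 25 percent share in the example are hypothetical; the share is not Gartner's figure and does not describe any real model's training data.

```python
import random


def blend(
    human: list[str],
    synthetic: list[str],
    synthetic_share: float,
    seed: int = 0,
) -> list[tuple[str, str]]:
    """Build a shuffled training mix in which about `synthetic_share` of examples are synthetic.

    Every human example is kept; synthetic examples are sampled without
    replacement until the target share is reached (or the pool runs out).
    Each example is tagged with its origin so the mix can be audited later.
    """
    if not 0 <= synthetic_share < 1:
        raise ValueError("synthetic_share must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_syn / (n_human + n_syn) = synthetic_share for n_syn.
    wanted = round(len(human) * synthetic_share / (1 - synthetic_share))
    n_syn = min(wanted, len(synthetic))
    mix = [("human", text) for text in human]
    mix += [("synthetic", text) for text in rng.sample(synthetic, n_syn)]
    rng.shuffle(mix)
    return mix


# Hypothetical placeholder corpora.
human_docs = [f"human-written text {i}" for i in range(30)]
synthetic_docs = [f"model-generated text {i}" for i in range(100)]

mix = blend(human_docs, synthetic_docs, synthetic_share=0.25)
n_synthetic = sum(1 for origin, _ in mix if origin == "synthetic")
print(len(mix), n_synthetic)  # 40 10
```

Keeping every human example and sampling only from the synthetic pool is one design choice among many; tagging each example with its origin makes it easy to check or adjust the synthetic share later.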

(To be continued)

(C) Yonhap News Agency. All Rights Reserved