*Editor’s note: K-VIBE invites experts from various K-culture sectors to share their extraordinary discoveries about Korean culture.

Matthew Lim's AI Innovation Story: Is AI Regulation Necessary?

By Matthew Lim, AI expert and director of the Korean Association of AI Management (Former Head of Digital Strategy Research at Shinhan DS)

In August, South Korea was shaken by a shocking digital sex crime. Deepfake pornography was indiscriminately distributed via Telegram, targeting over 100 educational institutions and military bases across the country.

One of the victims, a graduate of Inha University, discovered that her face had been inserted into obscene videos and that her personal information had been leaked. More disturbingly, the perpetrator went on to mock and threaten her, inflicting secondary harm.

What is particularly concerning is that these crimes are not limited to adults; they also target middle and high school students. Of the deepfake sex crime victims reported to police over the past three years, 59.8% were teenagers, and the number of cases rose 3.4-fold in just two years.

This incident starkly demonstrates the grave societal problems that can arise from the misuse of AI technology, especially deepfakes. Such crimes severely violate individual privacy and dignity, posing even greater threats to vulnerable groups like adolescents.

In this context, it is crucial to discuss seriously how AI technology should be regulated and managed, while still acknowledging its advances and benefits. AI regulation is not merely a limitation on technology but an essential task for protecting public safety and upholding ethical standards. I have gathered various views on how far such regulation should go.


▲ This image depicts deepfake technology. (Yonhap)

◇ The Scope of Government Regulation on AI Use

When the government regulates AI services offered to the general public, it must carefully weigh the scope and effectiveness of those regulations.

Regulations can undoubtedly help prevent crime and ensure social safety. However, excessive regulation risks limiting users' rights and stifling creative use.

For example, while strict regulation of deepfake technology could prevent its misuse, it may also discourage legitimate and creative applications. Small creators and ordinary users could find themselves restricted from using AI tools for content creation or everyday purposes.

What is critical here is introducing stricter penalties for the malicious use of AI technologies. Regulating misuse alone is not enough; there must be a deliberate effort to make the punishment for such crimes more severe.

For instance, heavier penalties for crimes such as deepfake-related sexual offenses and fraud would send a clear warning to potential offenders.

The problem of juvenile offenders is especially serious. Simply lowering the age of criminal responsibility, an option that has been debated for years, is not enough.

If children are deemed incapable of bearing responsibility because of their age, perhaps their parents, as guardians, should be held accountable. After all, the responsibility for educating and supervising children lies with the parents. We cannot accept a world in which there are victims but no one is held accountable.

It is unjust that while victims are left with lifelong scars, perpetrators continue with their daily lives without consequence.

It is reasonable to expect that harsher punishments would help deter such offenses before they occur. To prevent the misuse of AI technology, regulation alone is no longer enough.

Given the far-reaching impact of AI misuse, which can extend beyond individuals to society at large, it is imperative to enforce strong legal sanctions.


▲ Political activists call for countermeasures against deepfake crimes in Seoul on Sept. 12, 2024. (Yonhap)

◇ The Effectiveness and Fairness of AI Regulation

If regulations are too strict or complicated, users may find ways around them, exposing loopholes in the law. For regulations to be truly effective, they must balance technical understanding with legal standards. The key is to understand how people actually use AI and to craft reasonable rules that reflect that usage.

The fairness of regulation is another crucial issue. Regulations must not disproportionately disadvantage specific groups or individuals. For instance, small creators using AI tools for content creation should be protected from excessive regulation that could stifle their activities. To prevent a situation where only large corporations or those with advanced technology can freely use AI, regulations must be designed with equity in mind.

Finally, regulations must evolve in step with the rapid advancement of AI technology. As AI advances day by day, new issues will inevitably emerge. Regulations therefore cannot remain static; they must be continually reviewed and adjusted to keep pace with technological progress.

While AI service regulations are necessary, their scope and implementation require a cautious approach. Regulations should ensure social safety without infringing on users' rights or stifling their creativity. At the same time, strong penalties must be introduced to deter the malicious use of AI, minimizing crime and social disruption.

AI undoubtedly has the potential to transform society for the better, but if not properly managed, it could instead lead to societal chaos. Thus, regulation and punishment should be designed in a way that is reasonable and fair from the user's perspective, and flexible enough to adapt to the evolving technological environment.

Recently, The Wall Street Journal pointed out that South Korea is the country most vulnerable to deepfake pornography. We must avoid further international embarrassment. I hope lawmakers will stop wasting time on political squabbles and swiftly pass the laws the public needs.

The term "golden time," the critical window in which timely action can still prevent lasting harm, is not exclusive to the medical field; it applies here as well.


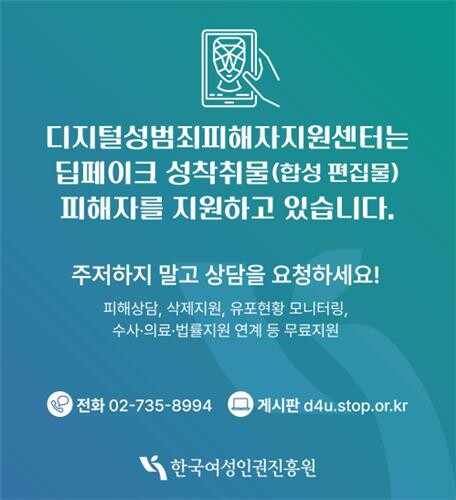

▲ A poster on the website for the Advocacy Center for Online Sexual Abuse Victims states that it supports the victims of deepfake sexual exploitation crimes. (PHOTO NOT FOR SALE) (Yonhap)

(C) Yonhap News Agency. All Rights Reserved